How smart are Smart Assistants? Looking at Siri, Alexa, Cortana & Co.

Nowadays, smart assistants can be found almost everywhere - in mobile phones, homes, cars. They are called Siri, Alexa, Cortana, Google Assistant, Bixby & Co. They are meant to act as personal assistants and make our lives easier: we tell them what we want, and they do it. Well, at least they should. But sometimes, or as some would say most of the time, it just doesn't work. Either they don't understand us, or they understand us but misinterpret our requests or give the wrong answers to our questions.

We recently held a webinar on why this happens and what the challenges for modern technology and Artificial Intelligence (AI) are. Christian Schömmer, our Director of Product Management and Natural Language Processing (NLP) expert, delivered fascinating insights into how the different modules need to interweave to create a digital assistant that works perfectly. This blog post summarizes the key talking points of the webinar.

1. History of smart assistants

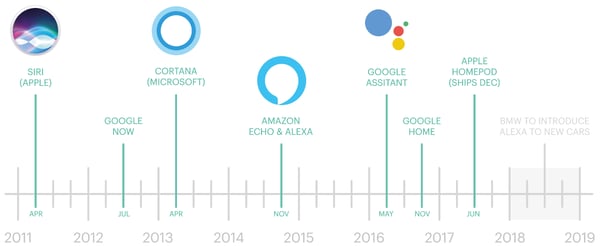

Smart assistants have been around for quite a while, as the chart shows. Apple started the voice assistant revolution with Siri in 2011 and was followed by Google, Microsoft, Amazon, and many others in the following years.

Source: https://www.theoneoff.com/journal/the-rise-of-vui/

An interesting development is the market share of these digital assistants. Apple's Siri was the first on the market and held the top position for many years in terms of installed base by brand, but others will be taking over shortly. According to recent predictions, Samsung's S-Voice/Bixby and Amazon's Alexa will surpass Siri as early as next year, followed by Chinese assistants from 2020.

2. Examples of when smart assistants go wrong

Have you ever tried asking your smart assistant questions like "What is the meaning of life?", "What should I cook tomorrow?" or "Can you help me write that article?" If not, give it a try and you will realize that your smart assistant is not as smart as it promises to be. The internet is full of examples of smart assistants being not that smart at all.

They might insult your family:

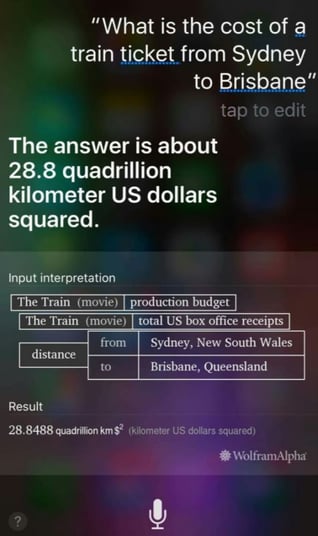

Give you very complex answers to very simple questions:

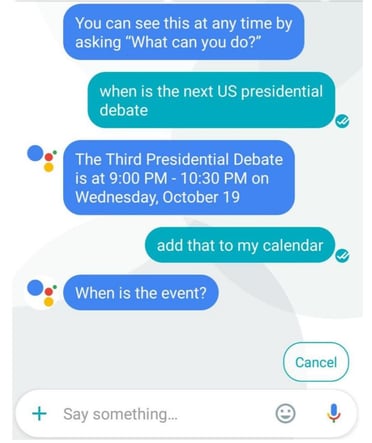

Or forget everything you were just communicating about in a blink of an eye - just like a goldfish:

This list could go on and on. While all these examples are quite funny, it is also fair to say that the more time goes by and the more development goes into these smart assistants, the smarter they actually get.

3. Use cases of smart assistants

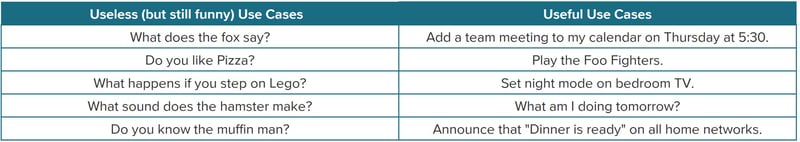

Without a doubt, the value of a digital assistant's answers also depends on the questions it gets asked. There are different use cases - those that are just for fun (the level of humor differs from one smart assistant to the next) and those that are actually useful. The following table shows some examples of both:

The main use case currently is probably adding meetings or playing a certain kind of music. However, the more connected homes evolve, the more smart assistants will be tasked with dimming lights, opening doors or closing window shutters.

4. Accuracy of smart assistants

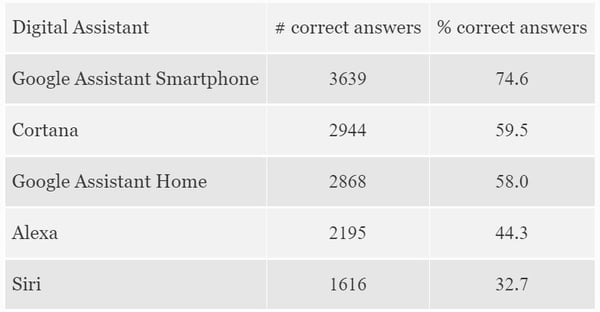

For all of the above-mentioned use cases, and many others, the accuracy of smart assistants varies from brand to brand. The digital marketing agency Stone Temple recently conducted an accuracy test with the top 5 digital assistants: Google Assistant (Smartphone/Home), Cortana, Alexa, and Siri. They asked 4,492 questions and classified an answer as "fully and completely correct" if the user got 100% of the information they asked for in their question.

The results vary enormously: Google Assistant for smartphones is way ahead of all other tested smart assistants - with more than twice as many correct answers as Apple's Siri.

Source: Stone Temple via Forbes

5. How do smart assistants work?

To demonstrate how smart assistants work, let's compare Sam and Smarty. Sam is a human being and a personal assistant to Robert. Smarty is Robert's digital assistant. Robert wants to find out if Smarty is better than Sam - and gives both of them the same task:

"Schedule a meeting with Peter on Friday."

To act on this request, the following things need to happen for both Sam and Smarty:

- Speech to text

- Text to semantics

- Semantics to action

It is an easy task for Sam because he has all the context and experience. This is what happens in his head:

"No problem!

Sure, it can only be your business partner Peter Green as you just talked to him.

It can only be next Friday because this Friday is a public holiday.

You usually meet for 1 hour, so I just go with that.

I'll put it in both of your calendars."

So, within a very short time frame and without any additional questions he completes the task successfully.

For Smarty, this task is a bigger challenge because a lot of things need to interweave perfectly in the three steps in order to fulfill this request:

- Speech to text:

- The audio signal emitted from the user needs to be recorded, digitized and then transformed into a text representation, i.e. a sequence of words.

- Example: In this request, Smarty needs to understand that Robert is talking about a meeting and not a meat thing. Intelligent, NLP-based technology can predict the correct meaning based on local grammar and dictionaries.

- Text to semantics:

- The words need to be analyzed for syntactical structures and meaning, i.e. transformed into some form of semantic representation. Smarty needs to know which Peter is meant, whether "Friday" automatically means next Friday, and understand different versions of "Schedule a meeting", e.g. "Schedule an appointment" or "Put something on my calendar".

- Example: In computer language, Robert's request needs to be something like this:

ACTION: sched_meeting

PARTICIPANT: Peter Green

TIME: Friday, October 26th, 2018

DURATION: ? (Default 1h)
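To make the text-to-semantics step more concrete, here is a minimal sketch of how such a representation could be produced with a few hand-written rules. It is only an illustration, not how any real assistant works: the `Frame` class, the `parse` and `next_weekday` functions, the phrase patterns and the `CONTACTS` address book are all assumptions made up for this example, and "Friday" is naively resolved to the first Friday after an assumed current date.

```python
import re
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional

# Hypothetical phrasings that should all map to the same action.
SCHEDULE_PATTERNS = [
    r"schedule an? (?:meeting|appointment)",
    r"put something on my calendar",
]

WEEKDAYS = ["monday", "tuesday", "wednesday", "thursday",
            "friday", "saturday", "sunday"]

# Hypothetical address book: first name -> full name.
CONTACTS = {"peter": "Peter Green"}


@dataclass
class Frame:
    """Semantic representation of a scheduling request."""
    action: str
    participant: Optional[str]
    day: Optional[date]
    duration_minutes: int = 60  # assumed default of 1h


def next_weekday(today: date, weekday_name: str) -> date:
    """Return the first date strictly after `today` that falls on the given weekday."""
    target = WEEKDAYS.index(weekday_name)
    days_ahead = (target - today.weekday() - 1) % 7 + 1
    return today + timedelta(days=days_ahead)


def parse(utterance: str, today: date) -> Optional[Frame]:
    """Map an utterance to a Frame, or return None if the intent is not recognised."""
    text = utterance.lower()
    if not any(re.search(pattern, text) for pattern in SCHEDULE_PATTERNS):
        return None
    # Naive participant lookup: the word after "with", resolved via the address book.
    name = re.search(r"with (\w+)", text)
    participant = CONTACTS.get(name.group(1)) if name else None
    # Naive time resolution: the first weekday name mentioned in the utterance.
    day = next((next_weekday(today, d) for d in WEEKDAYS if d in text), None)
    return Frame("sched_meeting", participant, day)
```

Calling `parse("Schedule a meeting with Peter on Friday.", today)` fills in the action, the participant and the day. What the sketch cannot do is exactly what makes Sam so good at his job: it knows nothing about public holidays, about which Peter Robert just talked to, or about how long the two usually meet.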

- Semantics to action:

- To be useful, the semantic representation must be interpreted as a sequence of operations, in this instance, e.g. opening the calendar app, creating a new appointment, adding Peter to the attendees, asking the user for confirmation and then saving it.

- Example: For a simple case like this one, the request can be solved using apps and services. Smarty has direct access to Robert's calendar and can programmatically check Peter's availability and put the meeting in the earliest overlapping free slot during work hours.
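The "earliest overlapping free slot during work hours" can be sketched in a few lines. This is a simplified illustration under stated assumptions: each calendar is modelled as a plain list of busy intervals, and the function name `earliest_common_slot` and its parameters are hypothetical, not part of any real calendar API.

```python
from datetime import datetime, timedelta
from typing import List, Optional, Tuple

Interval = Tuple[datetime, datetime]  # (start, end) of a busy block


def earliest_common_slot(
    busy_a: List[Interval],
    busy_b: List[Interval],
    work_start: datetime,
    work_end: datetime,
    duration: timedelta,
) -> Optional[datetime]:
    """Return the earliest start time within work hours at which both
    calendars are free for `duration`, or None if no such slot exists."""
    candidate = work_start
    # Walk through the busy blocks of both calendars in chronological order.
    for start, end in sorted(busy_a + busy_b):
        if start - candidate >= duration:
            break  # the gap before this block is long enough
        candidate = max(candidate, end)  # otherwise move past the block
    if candidate + duration <= work_end:
        return candidate
    return None
```

Given Robert's and Peter's busy blocks for the chosen Friday, the function returns the start of the first one-hour gap they share, or `None` if the day is full. A real assistant would still need the remaining operations from the list above, such as asking Robert for confirmation before saving the appointment.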

A single mistake at any step in this process will make both Smarty and Sam miss Robert's intent and therefore do the wrong thing – in Smarty's case, this always seems to be a web search – or nothing at all – probably the default for Sam.

Learn more

Want to dive deeper into the limits and opportunities of smart assistants? If so, we recommend watching the recording of our webinar "Smart Assistants, why are you so dumb?" This webinar is relevant to anyone interested in the advancement of NLP and its applications in everyday life and in business. Information about these advancements (or the lack thereof) is especially important for healthcare providers and legal professionals, who process tremendous amounts of voice and voice-to-text data.

Photo credit header image: natali_mis via Fotolia